// August 24th, 2012 // Artificial Intelligence

Since the creation of the field of artificial intelligence, an AI has been judged by what it is able to do. Programs that can follow a conversation, predict where there is oil underground, or drive a car autonomously are all judged intelligent based only on their functional behavior.

To achieve the desired functionality, all kinds of tricks have been (and still are) used and accepted by the AI community, and in most cases they were not even regarded as tricks: using large data structures that cover most of the search space, reducing the set of words the AI has to recognize, or even asking a human for the answer over the internet.

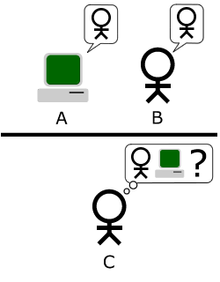

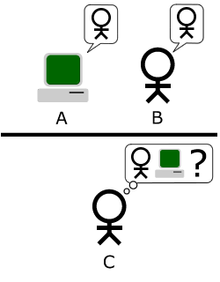

The Turing Test: C can see neither A nor B. A and B both claim to be human. Can C discover that A is an AI?

The intelligence of those systems could be measured by using the Turing test (adapted to each particular AI application): if a human cannot distinguish the machine from a person performing the same job, then the machine is said to be a successful AI. In other words, if it does the job, then it is intelligent. This kind of measure has led to the kind of AI that we have today, one that can beat the best human player at chess but cannot recognize a mug after small changes in the lighting of the room.

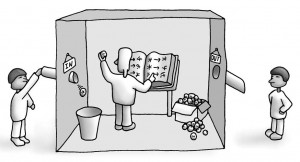

But not everybody agrees with that definition of AI. For instance, the Chinese room argument put forward by Searle objects that such an AI shows no real intelligence, and never will if it is based on that paradigm.

In the Chinese room experiment, a man inside a room answers questions in Chinese by following the instructions provided in a book, without understanding a word of Chinese.

I agree with Searle’s argument. I think the problem is that we are missing one important component of intelligence. From my point of view, intelligent behavior can be divided into two components: what is done by the behavior and how the behavior is done.

intelligence = what + how

The what: the intelligent behavior that one is able to perform.

The how: the way this intelligent behavior is achieved.

The Turing test measures only one part of the equation: the what. Nearly all AI programs today put most of their weight on the what part of the equation. Their designers assume that, if enough weight is put on that part, nobody will notice the difference (in terms of intelligence).

The reason for putting the weight on the what part is that it is easier to implement and, furthermore, that we can measure it (that is, we can use the Turing test as the measure of the what). Since there is no way of measuring the how, and nobody has a clue about how humans actually do intelligent things, people simply prefer to concentrate on the part that provides results in the short term: the what part. After all, the equation can reach a large value by working on either of the two constituents…

However, I think that truly intelligent behavior requires weight in both constituents of that equation. Otherwise we obtain unbalanced creatures that are far from what we humans are able to do in terms of intelligent behavior.
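The point about the additive equation can be illustrated with a small numeric sketch. Everything in it is an assumption made for illustration: the `Agent` class, the two example agents, their scores, and the `imbalance` measure do not come from any real measurement. It only shows that two agents can reach the same total while one of them relies almost entirely on the what.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    """Hypothetical agent with made-up scores for the two components."""
    name: str
    what: float  # assumed score for what the agent is able to do
    how: float   # assumed score for how the behavior is achieved

    @property
    def intelligence(self) -> float:
        # Additive reading of the equation: intelligence = what + how
        return self.what + self.how

    @property
    def imbalance(self) -> float:
        # Illustrative measure only: gap between the two components
        return abs(self.what - self.how)


# Hypothetical scores, chosen so that both totals are equal
lookup_program = Agent("lookup-table program", what=9.0, how=1.0)
balanced_agent = Agent("balanced agent", what=5.0, how=5.0)

for agent in (lookup_program, balanced_agent):
    print(f"{agent.name}: total={agent.intelligence}, imbalance={agent.imbalance}")
```

Both agents have the same total, so a measure that looks only at the sum (or only at the what) cannot tell them apart; only the gap between the two components reveals the difference.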

Here are two examples of unbalanced intelligences:

The case of intelligent behavior that scores only on the what: this is the case of a guy who has to take an exam on quantum mechanics. He has not studied at all, so he knows nothing about the subject. He has, though, a cheat sheet (provided by the teacher’s secretary) that allows him to answer all the exam questions correctly. After grading the exam, the teacher would say that he has mastered the subject. His knowledge of the subject is being judged only by what he has done (answering the exam). We would say that he is very intelligent in the field of quantum mechanics, but by observing how he answered the exam questions, we can see that he has no knowledge at all. He looks intelligent, but he is not.

The case of intelligent behavior with most of its value on the how: this is the case of animals, any of them, ranging from the smallest ones to those closest to us in terms of intelligence. Animals have a lot of how intelligence in the tasks that they are able to do, but they cannot do as many things as we can because their score on the what is lower.

Animals have low intelligence in the ‘what’ part but quite a lot in the ‘how’

Now, the question is: how can we measure the how part?

That is a difficult matter. Actually, we do not have any reliable measure for that, even for humans. I would propose that the how can be measured by measuring understanding. We decide how to do something based on our understanding of that thing and of everything related to it. When we understand something, we are able to use, perform, or communicate it in different situations and contexts. It is our understanding that drives how we do things.

In this sense, developmental psychologists have designed experiments with infants that try to figure out what they understand and to what extent they understand it [1][2]. Based on that, a scale built on the different stages of infant development could be created and applied to AIs to measure their understanding. I would take human development as the metric for this scale, starting at zero for the understanding of a newborn child and going up to 10 for the understanding of an adult. The same tests could then be applied to a machine in order to determine its level of intelligence in the how part.

In conclusion, I believe that intelligence is not about looking at a table and reading off the correct answer (as in the case of chess, Go, or the guy who cheats on the exam). Intelligence involves finding solutions with a limited amount of resources in a very wide range of different situations. This stresses the importance of how things are done when performing intelligent behavior.

References:

[1] Jean Piaget, The Origins of Intelligence in Children, International Universities Press, 1957

[2] George Lakoff and Rafael Núñez, Where Mathematics Comes From, Basic Books, 2000

Basically, what his theory says is that feelings and sensations are not something that happens to us but rather something that we do, and that what actually defines the object, color, sound, smell, taste, or feeling that one is having are the laws that govern how one interacts with it. In conclusion: the feel is not generated in the brain but in the sensorimotor interaction itself. The brain only enables the sensorimotor interaction that constitutes the experience of feel.
