October 29th, 2012 // Artificial Intelligence, Talks

The following post is a straight transcription of the speech with the same title that I gave at Robobusiness 2012 in Pittsburgh. You can find other details of the speech at this link.

You may use the text and images freely, but please give credit to the author.

================================================

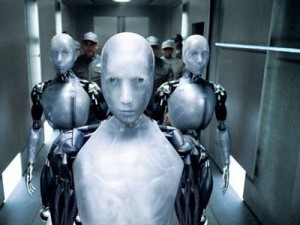

What is preventing us from having humanoid robots like these at home?

What is preventing us from having one at home?

What is preventing us from selling those robots?

We are still years away from those robots.

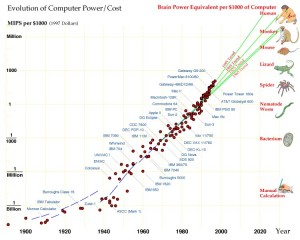

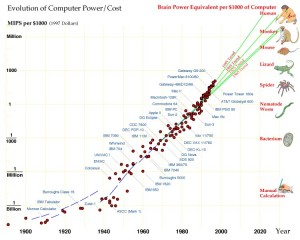

And there are many reasons for that, one of them being that robots are not intelligent enough. Some researchers say that the reason for this lack of intelligence is just a lack of CPU power and good algorithms. They believe that big supercomputers will allow us to run all the complex algorithms required to make a robot move safely through a crowd, tell us apart from other people, or understand spoken commands. For those researchers, it is just a matter of CPU power, which will be solved by the year 2030, when CPU power will reach that of a human brain (according to some predictions).

But I do not agree.

This is a gnat.

A gnat is an insect that doesn’t even have a brain, just a few nerve cells distributed along its body. Its CPU power (if we can measure that for an animal) is very small. However, the gnat can live in the wild, flying, finding food, finding mates, avoiding dangerous situations and finding the proper place for its offspring… and all of this within four months of life. To date there are no robots able to do what a gnat can do with the same computational resources.

I believe that considering artificial intelligence just a matter of CPU is a brute force solution to the problem of intelligence and discards an important part of it.

I call the CPU approach the easy path to A.I.

I believe there is another approach to artificial intelligence, one where CPU has its relevance, but is not the core. One where implementing cognitive skills is the key concept.

I call this approach the hard approach to A.I. I think that is the approach that is required in order to build those robots that we’d love to have.

In this post I want to show you:

– What I call the easy path to A.I.

– Why I believe this path will eventually fail to deliver the A.I. required for service robots

– What I call the hard path to A.I., and why it is needed

The easy approach to A.I.

So, what do I call the easy path to A.I.?

Imagine that you have a large database at your service to store all the data you compute about a subject, in the following way: if this happens then do that, if this other thing happens then do that other thing.

There will be, though, some data that you cannot know for sure, because there are uncertainties in the information you have or because it requires very complex calculations.

For those cases you calculate probabilities, in the sense of: if something like this happens, then it is very likely that this is the best option.

Then you make decisions based on those tables and probabilities. If you apply this method to your daily life, you will decide whether what you are looking at is a book or an apple based on the data in the table or on the probabilities. If you could compute the table and the probabilities for the whole world around you, you could make the best decision at any moment (actually the table is making the decision for you, you are just following it!)
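To make the idea concrete, here is a minimal sketch of such a decision system in Python. The observations, labels and probability values are all invented for illustration; they are assumptions of this sketch, not data from any real recognizer.

```python
# Hypothetical exact rules: "if this happens then do that".
RULES = {
    "rectangular object with pages": "book",
    "red round object on a tree": "apple",
}

# Hypothetical probabilities for observations that are not in the rule table.
LIKELIHOODS = {
    "red rectangular object": {"book": 0.8, "apple": 0.2},
}


def decide(observation):
    """Return (label, confidence): table first, probabilities second."""
    if observation in RULES:
        return RULES[observation], 1.0
    options = LIKELIHOODS.get(observation)
    if options is None:
        # Nothing stored for this input: the system has no answer at all.
        return None, 0.0
    label = max(options, key=options.get)
    return label, options[label]


print(decide("rectangular object with pages"))
print(decide("red rectangular object"))
print(decide("something never seen before"))
```

Note that the last call returns no answer: the system only knows what has been stored in its tables.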

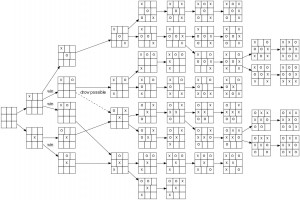

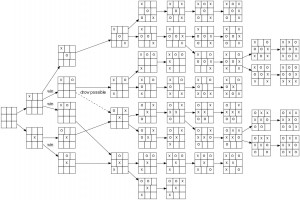

That is exactly the approach followed in computer board games, one of the first subjects where artificial intelligence was applied. For example, in tic-tac-toe.

You all know tic-tac-toe. You may also know that a complete solution exists for the game. I mean, there is a table that indicates the best move for each configuration of the board.

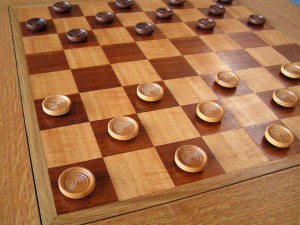

When the table of best moves for each configuration has been discovered/computed, it is said that the game has been solved. Tic-tac-toe has been a solved game since the very beginning of AI, because of its simplicity. Another game that has been solved is checkers; its solution was completed in 2007.
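As an illustration of what solving a game means, the following Python sketch computes the best move for every tic-tac-toe position reachable from the empty board, using plain exhaustive search with memoization. The board encoding (a 9-character string) and the function names are choices made for this sketch only.

```python
from functools import lru_cache

# The eight winning lines, as index triples into a 9-character board string.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if a line is complete, otherwise None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def solve(board, player):
    """Return (value, best_move) for the player about to move.

    value is +1 for a forced win, 0 for a draw, -1 for a forced loss.
    The cache filled by this function is the table of best moves.
    """
    if winner(board) is not None:
        return -1, None  # the opponent has just completed a line
    moves = [i for i, cell in enumerate(board) if cell == " "]
    if not moves:
        return 0, None  # full board, nobody won
    other = "O" if player == "X" else "X"
    best_value, best_move = -2, None
    for move in moves:
        child = board[:move] + player + board[move + 1:]
        value = -solve(child, other)[0]
        if value > best_value:
            best_value, best_move = value, move
    return best_value, best_move


value, move = solve(" " * 9, "X")
print("value of the empty board for X:", value)
print("positions stored in the table:", solve.cache_info().currsize)
```

With perfect play from both sides the empty board is worth 0, that is, a draw. The program plays perfectly without knowing anything about the game beyond the table it has filled.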

There are, however, some games that have not been solved yet, such as Go…

… or chess.

Those games have many more possible board configurations. They have so many that they cannot be computed even with the best supercomputers of today. For instance, a rough estimate of the number of possible configurations in chess is:

– 16+16 = 32 chess pieces and 64 squares, so 64!/32! ≈ 4.8·10^53 combinations
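The estimate above corresponds to the number of ways of placing 32 distinguishable pieces on 64 squares, 64·63·…·33 = 64!/32!. It is only a rough figure (it ignores captures, identical pieces and illegal positions), but the arithmetic is easy to check with a few lines of Python:

```python
from math import factorial

# Ways to place 32 distinguishable pieces on 64 squares: 64 * 63 * ... * 33.
placements = factorial(64) // factorial(32)

print(f"{placements:.1e}")
print("number of digits:", len(str(placements)))
```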

Due to that huge number of possible combinations, it is impossible (to date) to build the complete table for all chess board configurations. There is not enough CPU power to compute such tables. However, complete tables already exist for boards with any combination of 6 or fewer pieces.

So a computer playing chess can use the tables to know the best move when there are only 6 pieces on the board. What does the computer do when it is not in one of those situations with 6 pieces or fewer? It builds another kind of table… a probabilistic one. It calculates probabilities based on some cost functions. In those cases, the machine doesn’t know which move is the best at any moment, but has a probability of which one is the best. The probabilities are built based on human knowledge.
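The two-stage scheme just described can be sketched as follows. This is a deliberately simplified illustration, not how any real chess engine is written: positions are reduced to a string of piece letters, the cost function is a plain material count using the conventional textbook piece values, and the endgame table contains a single invented entry.

```python
# Conventional piece values (an assumption of this sketch).
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 0}


def material_score(pieces):
    """Score a position given as piece letters: uppercase white, lowercase black.

    Positive means white is ahead in material.
    """
    score = 0
    for piece in pieces:
        value = PIECE_VALUES[piece.upper()]
        score += value if piece.isupper() else -value
    return score


def choose_move(position, candidate_moves, endgame_table):
    """Pick a move for white.

    candidate_moves maps each move name to the position it leads to.
    If the position is in the (hypothetical) endgame table, follow the table;
    otherwise take the move with the best material score.
    """
    if position in endgame_table:
        return endgame_table[position]
    return max(candidate_moves, key=lambda m: material_score(candidate_moves[m]))


# Invented example: white has a king and three pawns against king, rook, bishop.
moves = {
    "pawn takes rook": "KPPPkb",
    "keep the pawns where they are": "KPPPkrb",
}
print(choose_move("KPPPkrb", moves, endgame_table={}))
```

With this cost function the program grabs the rook, because that is the move that improves the number it has been told to maximize.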

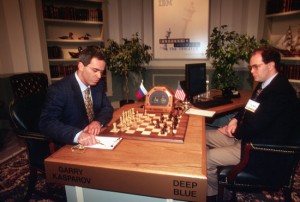

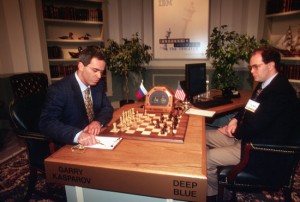

Using this approach it has been possible for a computer to beat the best human player in the world.

However, for me this approach makes no sense if what we are talking about is a system that knows what it is doing and uses this knowledge to perform better.

You may say: who cares, if in the end they do the job correctly (and even better than us!)? And you may be right, but only for this particular example of chess.

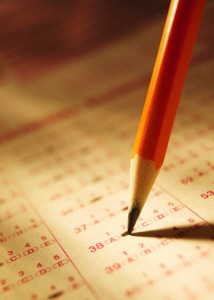

The table approach is like giving a student a table with all the answers to all the exams that he will have to take. Of course he will do perfectly! Anybody would. But in the end, the student knows nothing and understands even less.

Why more CPU (the easy approach) may not be the solution

But anyway, the CPU approach is exactly what most A.I. scientists think about when talking about constructing intelligent systems.

Given the success obtained with board games, they started to apply the same methodology to many other A.I. problems, like speech recognition or object recognition. Hence the problem of constructing an A.I. has become a race for the resources that allow bigger tables and more calculation, attacking the problem on two fronts: on one side, developing algorithms that can construct those tables more efficiently (statistical methods are winning at present); on the other side, building more and more powerful computers (or using networks of them) to provide more CPU power for those algorithms. This methodology is exactly what I call the easy A.I.

But do not misunderstand me: even if I call it the easy approach, it is not easy at all. This approach is taking some of the best minds in the world to solve those problems. I call it that because it is a kind of brute force approach, and because it has a clear and well defined goal.

And because it is successful, this approach is being used in many real systems.

The easy AI solution is the approach used, for example, in the Siri voice recognition system.

Or in Google Goggles (an object recognition system).

Both systems use the power of a network of computers connected together to calculate what has been said or what object has been shown.

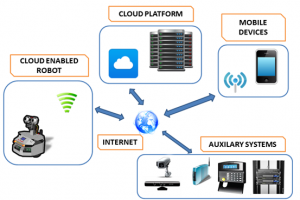

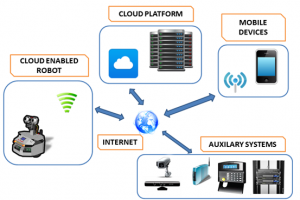

And the idea of using networks of computers is so successful that a new trend following those ideas has appeared for robots. It is called cloud robotics: robots are connected to the cloud and have the CPU of the whole cloud for their calculations (among other advantages, like shared information between robots, extensive databases, etc…)

That is exactly how Google’s driverless cars can be driven, by using the cloud as a computing resource.

And that is why cloud robotics is being seen as a holy grail. A lot of expectations are placed on this CPU capacity, and it looks (again) like A.I. is just a matter of having enough resources for complex algorithms.

I don’t think so.

I don’t think that the same approach that works for chess will work for a robot that has to handle real life situations. The table approach worked with chess because the world of chess is a very limited world compared with what we have to face when handling speech recognition, face recognition or object recognition.

Google cars do not drive on the highway during rush hour (to my knowledge).

Google Goggles makes as many mistakes as correct detections when you use it in a real life situation.

And Siri doesn’t handle the problem of speech recognition in real life, because it uses a mic close to your mouth.

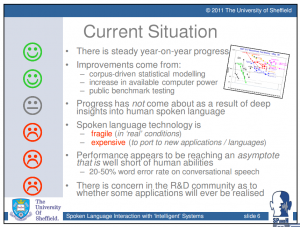

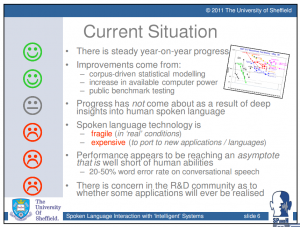

Last February I was at the euCognition meeting in Oxford. There I attended a talk given by Professor Roger Moore, an expert in speech recognition systems.

In his talk, Professor Moore suggested that in recent years, even though some improvement has come from increased CPU power, speech recognition seems to have reached a plateau in error rate, somewhere between 20% and 50%. In other words, even though CPU power has kept increasing over the years and speech recognition algorithms have become more efficient, no significant improvement has been obtained. Worst of all, some researchers are starting to think that some speech problems will never be solved.

After all, those speech algorithms are only following their tables and statistics, and leave all the meaning out of the equation. They do not understand what they are doing, hearing or viewing!

He ended his talk by suggesting that a change of paradigm may be required.

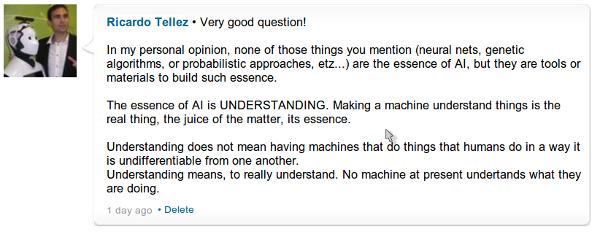

Of course, Professor Moore was talking about including more cognitive abilities in those systems. And of all the cognitive abilities, I suggest that the key one is understanding.

Understanding as a solution, the hard approach

What do I mean by understanding? That is a very difficult question that I can’t answer directly. What I can do is show what I mean with a couple of examples.

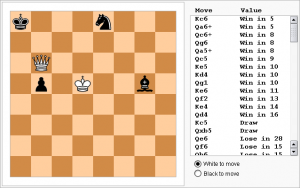

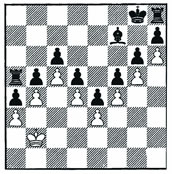

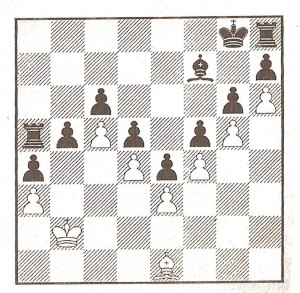

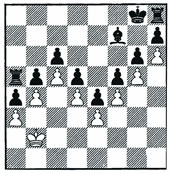

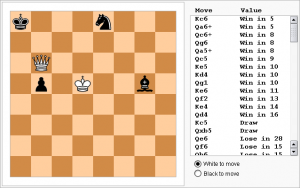

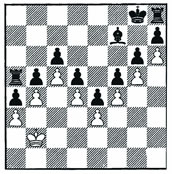

This is a real case that was presented to Deep Thought, the machine that almost beat Garry Kasparov in the 1990s (from William Harston, J. Seymore & D. Norwood, New Scientist, n. 1889, 1993). In this situation, the computer plays white and has to move. When this situation was presented to Deep Thought, it took the rook. After that, the computer lost the game because of its inferior number of pieces.

When this situation is presented to an average human player, the player clearly recognizes the value of the pawn barrier: it is the only protection white has against black’s superior number of pieces. The human avoids breaking it, leading the match to a draw. The person understands its value, but the computer does not.

Of course you can program the machine to learn to recognize the pattern of the barrier. That doesn’t mean that the computer has grasped the meaning of the barrier, only that it has a new set of data in its table for which it has a better answer. The demonstration that the machine doesn’t understand anything comes when you present it with the next situation.

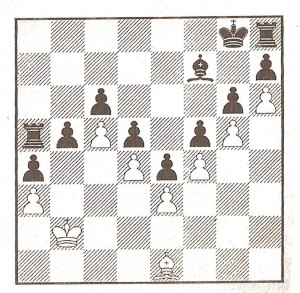

In this situation, the computer again plays white. There is no barrier, but white can create one by moving the bishop. When the situation is presented to a computer chess program, it takes the rook (from a Turing test by William Harston and David Norwood).

What this situation shows is that the machine has no understanding at all. It cannot grasp the meaning of a barrier unless you specify in the code all the conditions for it. But even if you manage to encode the conditions, there will be no REAL understanding at all. Variations of the same concept are not grasped by the machine, because it doesn’t have any concept at all.

The only solution to that problem proposed by the easy approach is to have enough computational power to encode all the situations. Of course, those situations have to be detected beforehand by somebody in order to make the information available to the machine.

The problem I see with that approach, when applied to real life situations like those of a service robot, is that it may not be possible to achieve such comprehension while leaving understanding out of the picture… mainly because you will never have enough resources to deal with reality.

Another example of what I mean by understanding.

Imagine that we ask our artificial system to find a natural number that is not the sum of three squares.

How would easy AI solve the problem? It would start checking all the numbers, starting from zero and counting up.

0 = 0^2 + 0^2 + 0^2

1 = 0^2 + 0^2 + 1^2

2 = 0^2 + 1^2 + 1^2

…

7 ≠ 0^2 + 0^2 + 0^2 = 0

…

7 ≠ 1^2 + 1^2 + 2^2 = 6

7 ≠ 1^2 + 2^2 + 2^2 = 9 ← here it is! Seven is the number!

For this example, the AI found a proof that there is a number that is not the sum of 3 squared numbers. Easy and just a few resources used. As you can see, this is a brute force approach, but it works.
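The brute force search described above can be written in a few lines of Python. The function names are choices made for this sketch; the method is simply to try every combination of three squares for each number in turn.

```python
from itertools import combinations_with_replacement, count
from math import isqrt


def is_sum_of_squares(n, k):
    """True if n can be written as a sum of k squares (zero allowed)."""
    roots = range(isqrt(n) + 1)
    return any(sum(r * r for r in combo) == n
               for combo in combinations_with_replacement(roots, k))


def first_counterexample(k):
    """Count up from zero until a number is NOT a sum of k squares.

    Warning: this loops forever if no such number exists.
    """
    for n in count(0):
        if not is_sum_of_squares(n, k):
            return n


print(first_counterexample(3))  # prints 7
```

The search stops as soon as it reaches seven. Note the warning in the second function: the program has no way of knowing in advance whether it will ever stop.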

Now imagine that we want the same system to find a number that is not the sum of 4 squared numbers.

The easy AI would follow the same approach, but now it would require more resources. After having checked that each of the first 2 million numbers is the sum of 4 squares, you may start thinking that more resources are going to be needed in order to find one. You can add faster computers and better algorithms to compute the additions and squares, but the A.I. would never find it, because it doesn’t exist.

There is no such number!

How do I know that it doesn’t exist?

Because there is a theorem by Lagrange that demonstrates just that.
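A bounded version of the brute force check makes the limitation visible. The sketch below (function name and the limit of 2000 are arbitrary choices for illustration) lists every number up to a limit that is not a sum of four squares. It builds all sums of two squares first and then adds them in pairs, which is just a faster way of trying every combination.

```python
from math import isqrt


def not_sum_of_four_squares(limit):
    """Return all n in 0..limit that are not a sum of four squares."""
    squares = [r * r for r in range(isqrt(limit) + 1)]
    # Every sum of two squares that does not exceed the limit.
    two = {a + b for a in squares for b in squares if a + b <= limit}
    # A sum of four squares is a sum of two sums of two squares.
    four = {x + y for x in two for y in two if x + y <= limit}
    return [n for n in range(limit + 1) if n not in four]


print(not_sum_of_four_squares(2000))  # prints []
```

The empty list only says that no counterexample exists below the chosen limit. Raising the limit gives more evidence, never a proof; the statement for all natural numbers is exactly what Lagrange's theorem provides.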

The human approach to solving the problem is different. We try to understand the problem and, based on this understanding, find a proof instead of trying every single natural number. That is what Lagrange did. And he did not require all the resources of the Universe!

And that is my definition of understanding and I cannot put it into better words.

Now the next question is: if I say that understanding is what is missing, how can we include it in our robots? And how can we measure whether a system has understanding?

Given that there is no clear definition of what exactly understanding is, we know even less about how to embed it into a machine. That is why I call this the hard approach to A.I. Hence I can only provide you with my own ideas about it.

I would say that a system has understanding of a subject when it is able to make predictions about the subject: it is able to predict how the subject would change if some input parameters changed.

I think that you understand something when you are able to make predictions about that something and, in addition, you are aware that you can predict it. I cannot tell you more.

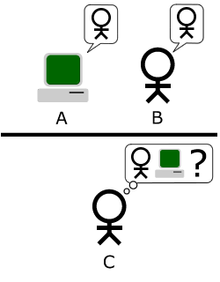

How can we test whether a machine has understanding? This is also not clear. At present the standard test for judging an artificial machine is the Turing test, which you all know. That is, if the machine can do the job at least as well as a person, then it is OK for us.

However, this test can be fooled in the same way that the teacher was fooled by the student who had been given the answers by someone else.

And the reason is that the Turing test focuses on one single part of intelligence: the what part. From my point of view, intelligence has to be divided into two parts: the what and the how.

intelligence = what + how

The what indicates what the system is able to do, for example playing chess, speaking a language or grasping a ball. The how indicates in which way the system does it and how many resources it uses: looking at a table, calculating probabilities or just reasoning about meanings.

Examples of a system with a lot of what but little how: the chess player, or the student at the exam. Example of high how but low what: the gnat’s life.

The problem with current artificial intelligence is that it concentrates only on the what part. Why? Because it is easier and provides quicker results. But also because it is the part that can be measured with a Turing test.

But the how part is as important as the what. However, we have no clue about how to measure it in a system. One idea would be to use experiments similar to the ones used by psychologists. However, this would only allow us to measure systems up to a human level, and not systems beyond it or different from it (because we cannot even mentally conceive them).

To conclude,

I think that at some point in our quest for artificial intelligence we got confused about what intelligence is. Of course natural intelligence uses tables, and also calculates probabilities in order to be efficient. But it also uses understanding, something that we cannot define very well, and that we cannot measure.

Time and resources need to be dedicated to studying the problem of understanding, instead of just passing it by as has happened up to now.