Robot Robustness

// June 23rd, 2012 // Research, Work

Over the last three days I have been attending the Robocup competition held in Mexico. It is a competition where robots compete against each other in dynamic scenarios, outside the more controlled settings of the lab. There are several leagues in which robots can compete. Specifically, I have been following the Robocup@Home league, which is devoted to testing service robots in a home scenario.

The Robocup@Home arena during training periods

In Robocup@Home, robots must perform tasks in a home environment. They have to follow human orders and help people in common situations of daily life. For example, tests include following the owner through a chaotic environment, fetching objects for the owner from somewhere else, or helping the owner clean a room.

At this year's competition, even though the tests are very simple for a human, most of the robots failed right from the start. They were not able to perform the tasks they had (supposedly) been trained for.

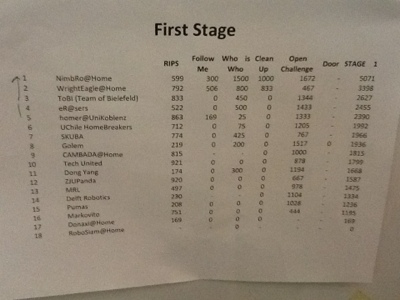

Classification table after the first stage of the competition

I am sure that most of the teams had their robots working perfectly in their labs before coming to the competition, yet their performance in the competition arena was very poor. The question, then, is: what happened between the robot working in the lab and the robot failing at the competition?

When teams are asked why their robot failed, they report that it simply suffered a failure in one of its mechanical, electronic or software components. They usually add that they made a last-minute change to adapt the robot to the new environment, and that this change triggered the errors.

There are two interesting points in that answer:

In this post, I’m going to concentrate on the second point: the robots in the competition are not robust. That is, small changes in their working conditions make them fail. Those conditions include last-minute changes to the robot's code or hardware, but also, and more importantly, the differences between the test situation in the lab and the test situation at the competition.

The Cosero robot (one of the most robust) practicing how to identify and grasp objects at Robocup@Home

At universities, people focus on building proofs of concept. That is, researchers and students work to show that something is possible at least once. Once this has been demonstrated, they move on to another subject and try to show that it, too, is possible in principle. After all, they get recognition for each new discovery or demonstration of feasibility. So they care less about robustness, as long as they can keep producing more proofs of concept.

Companies, on the other hand, need robust products in order to sell them. By robust products, I mean products that always deliver what is expected of them. In the case of robots, a robust robot must be able to follow its master 99% of the time, in different places and locations, and be able to grasp objects or understand language in almost any situation.

To achieve robust products, companies have developed a whole range of quality assurance mechanisms that can be applied to mechanical, electronic and software parts alike. They also dedicate time to implementing those mechanisms, which include unit testing, extensive testing of hardware under extreme conditions, testing under noisy conditions, testing in simulated environments, etc.
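As a rough illustration of what "testing under noisy conditions" can look like in software, here is a minimal Python sketch. Everything in it is hypothetical and not taken from any Robocup team: a simple proportional person-following controller (`follow_command`), a Gaussian model of range-sensor noise (`noisy_reading`), and a `success_rate` check that counts how often the command computed from a noisy reading stays close to the noise-free command. The two noise levels standing in for "the lab" and "the arena" are assumptions chosen only to show the effect.

```python
import random

# Hypothetical controller parameters (assumptions, not from any real robot).
TARGET_DISTANCE_M = 1.0   # desired following distance to the person
MAX_SPEED_MPS = 0.5       # forward speed cap
GAIN = 0.8                # proportional gain


def follow_command(measured_distance_m: float) -> float:
    """Forward speed proportional to how far the person is beyond the target distance,
    clipped to the range [0, MAX_SPEED_MPS]."""
    error = measured_distance_m - TARGET_DISTANCE_M
    return max(0.0, min(MAX_SPEED_MPS, GAIN * error))


def noisy_reading(true_distance_m: float, noise_std_m: float, rng: random.Random) -> float:
    """Simulate a range sensor with additive Gaussian noise; readings cannot be negative."""
    return max(0.0, rng.gauss(true_distance_m, noise_std_m))


def success_rate(true_distance_m: float, expected_speed: float, tolerance: float,
                 noise_std_m: float, trials: int = 1000, seed: int = 0) -> float:
    """Fraction of noisy trials whose command is within `tolerance` of the expected one."""
    rng = random.Random(seed)  # seeded so the test is reproducible
    ok = 0
    for _ in range(trials):
        cmd = follow_command(noisy_reading(true_distance_m, noise_std_m, rng))
        if abs(cmd - expected_speed) <= tolerance:
            ok += 1
    return ok / trials


if __name__ == "__main__":
    # Person at 1.5 m: the noise-free command is 0.8 * 0.5 = 0.4 m/s.
    print(f"lab noise (2 cm):    {success_rate(1.5, 0.4, 0.05, 0.02):.3f}")
    print(f"arena noise (10 cm): {success_rate(1.5, 0.4, 0.05, 0.10):.3f}")
```

In this toy setup the same controller that passes almost every trial at the low noise level succeeds only about half the time at the higher one, which mirrors the lab-versus-arena gap described above: nothing in the code changed, only the conditions did.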

However, companies do not yet find the Robocup competition attractive, so they do not enter their products, which would make the competition more interesting.

A robot gets stuck in cyclic behavior during the Robocup competition due to a lack of robustness

Since researchers do not have access to all those techniques (not because they don’t know them, but because they lack the time and money to implement them), the solution they have found is an intermediate one. They buy as much off-the-shelf hardware as they can (Kinect cameras, Hokuyo lasers, Pioneer mobile bases, Katana arms…), and they use as much existing software as they can (ROS and other open-source libraries, for instance). However, some robot parts are still not available on the market, so participants must build them themselves. And this is where the possibility of failure appears…

I expect that when companies participate in the competition, the level will rise, since most of the current failures will be avoided and the competition will focus on skill development rather than on achieving robustness. The next question, then, is: how can we make the competition interesting to companies…

Reem-B, a product of Pal Robotics