Understanding Understanding (For Service Robots)

// February 18th, 2012 // Artificial Intelligence

The robot looks at the can. Then it computes a long list of conditionals: if the pattern over there looks like this, then it may be a can of Coke with 80% likelihood. If instead the pattern looks like that, then it may be a book with 75% likelihood. If the pattern is like that other one, maybe it is a car. If none of those patterns is recognized, then move a little bit to the left and look again.

It may be that we humans work this way when trying to recognize an object. We would explore an endless list of conditions (one set for the recognition of each concept we know) that, at the end of the chain, determine what we see, what we hear or what we feel with a given probability.

I don’t think so.

If that were the procedure we followed, it would take us ages to recognize everything we are able to recognize!

There is something missing in that picture that prevents us from creating robots able to recognize voices, objects and situations. That missing element is what we need to comprehend in order to create artificial cognitive systems.

And I believe the important element is understanding.

Understanding is what allows us to identify what is relevant to the situation at hand. It is what allows us to focus on what really matters. It is what makes us recognize the can, the book or our neighbour's speech in a reasonable amount of time. We do not understand because we recognize; we recognize what we understand.

Understanding compresses reality into chunks that we can manage. Instead of having to build a table with all the cases, we understand the situation, and by doing so we compress that reality into a lower-dimensional space that can be handled in a reasonable amount of time.

Without understanding, a machine cannot discard any of the options in front of it, and hence it has to evaluate all the possibilities. That is why, for a robotic system to recognize something, it has to build a table with all the options and check every one of them to find the one that best matches the current situation. Of course, you can provide the machine with some tricks (most of them heuristic rules) that help it discard obviously useless elements and shrink the table significantly. But in the end, those are nothing more than that… tricks.
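
To make this concrete, here is a minimal Python sketch of table-based recognition, assuming each known object is stored as a hypothetical feature vector (height, width, redness). `recognize_exhaustive` checks every entry of the table; `recognize_with_heuristic` first applies one heuristic rule (discard entries whose height is far off) and then searches what is left. All names, features and numbers are illustrative assumptions, not taken from any real robot.

```python
import math

# Hypothetical "table of all the options": every known object is stored as a
# feature vector (height in cm, width in cm, redness from 0 to 1).
TEMPLATES = {
    "coke can": (12.0, 6.5, 0.9),
    "book": (24.0, 17.0, 0.3),
    "toy car": (5.0, 10.0, 0.6),
}


def recognize_exhaustive(observation, templates=TEMPLATES):
    """Check every entry of the table and return (best label, entries checked)."""
    best_label, best_distance, checked = None, math.inf, 0
    for label, features in templates.items():
        checked += 1
        d = math.dist(observation, features)  # Euclidean distance (Python 3.8+)
        if d < best_distance:
            best_label, best_distance = label, d
    return best_label, checked


def recognize_with_heuristic(observation, templates=TEMPLATES, max_height_gap=5.0):
    """Same search, after a heuristic rule discards entries whose height is far off."""
    candidates = {
        label: features
        for label, features in templates.items()
        if abs(features[0] - observation[0]) <= max_height_gap
    }
    return recognize_exhaustive(observation, candidates)


observation = (11.5, 6.8, 0.85)
print(recognize_exhaustive(observation))      # ('coke can', 3)
print(recognize_with_heuristic(observation))  # ('coke can', 1)
```

The heuristic shrinks the search from three entries to one, but it adds no understanding: for an observation that matches nothing in the table, the exhaustive search can only return the nearest stored entry, and the heuristic version returns `None` once it has discarded everything.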

A 2007 paper claims that the game of checkers has been solved [1]. Roughly speaking, a game is solved when the table of moves for a perfect game has been worked out, so the machine knows exactly at each step which move is best. For checkers, the result is that perfect play by both sides leads to a draw. But knowing the table and never losing doesn't mean that the system understands any of the moves, nor the game.
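
Checkers is far too big to tabulate in a few lines, but the idea of a solved game can be sketched on a toy subtraction game (an illustrative assumption, not the method used in [1]): players alternately remove 1 to 3 stones, and whoever takes the last stone wins. The hypothetical `solve_subtraction_game` fills in, for every pile size, whether the player to move can force a win and which move does it.

```python
def solve_subtraction_game(max_stones, max_take=3):
    """Build the complete table for a toy game: players alternately remove
    1..max_take stones, and whoever takes the last stone wins.

    wins[n] is True if the player to move with n stones left can force a win;
    best_move[n] is a winning number of stones to take, or None if every move loses.
    """
    wins = [False] * (max_stones + 1)  # wins[0] is False: no stones left, you have lost
    best_move = [None] * (max_stones + 1)
    for n in range(1, max_stones + 1):
        for take in range(1, min(max_take, n) + 1):
            if not wins[n - take]:  # this move leaves the opponent in a losing position
                wins[n] = True
                best_move[n] = take
                break
    return wins, best_move


wins, best_move = solve_subtraction_game(12)
print(best_move[:9])  # [None, 1, 2, 3, None, 1, 2, 3, None]
```

With the table built, the program plays perfectly from any pile of up to `max_stones` stones, yet nothing in it states the rule behind the entries (leave your opponent a multiple of four stones). Arguably, that compact rule is closer to what this post calls understanding than the table itself.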

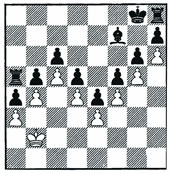

A good example of what I meant is the one chess game described by Roger Penrose on his book Shadows of the mind [2] and that is depicted in the following figure.

As explained in a Fountain Magazine post:

In the chess game above, by just playing the king left and right, white can bring the game to a draw. But, at first to make the game a draw, the white player has to understand the situation. Since the computer has no capability to understand, it may think that it would be more profitable to take the castle and therefore it loses the game.

For me, understanding is the main challenge that artificial cognitive systems must face in the near future if we want them working around us (for example, as service robots).

Of course, for a system that has the whole table of all the possibilities of the Universe, understanding is not necessary. Understanding is only necessary when the available resources are small compared with the complexity of the problem to be solved. The problem of understanding understanding, and reproducing it in artificial systems, is therefore the key to successful A.I. systems.

But this is a much harder problem than working on tables and on methods to cut down the explosion of possibilities. That is why A.I. researchers prefer to concentrate on using bigger computers and finding better selective searches. By doing so, you obtain quicker partial results that you can publish, sell, you name it. Even though those are very difficult things, they are easier than implementing real understanding.

Current intelligent systems are just cheaters. We provide them with a piece of paper containing the correct answers. At most, we provide them with complex algorithms to decode those answers, answers that we have prepared and know in advance. But when the answer is not on the table, the system gets lost, and its lack of understanding becomes visible.

Now, what does it mean for a robot to understand? For me, the key element of understanding is prediction.

You cannot make a robot understand what a wheel is by showing it a set of different wheels and letting it generalize. If we then show the robot a round steel plate, it will not identify the plate as a possible wheel, because no example of that kind was included in the generalization. Instead, what we have to do is provide the robot with prediction skills. These will allow it to predict how such an item will behave in the application at hand, so it will classify the plate as a wheel because it can predict that the plate could roll like a wheel.
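
Here is a minimal Python sketch of classifying by prediction rather than by examples, under strong simplifying assumptions: an object is a 2D outline (a list of vertices around its axle), and the only behaviour predicted is how high the axle sits above flat ground as the object turns. The function names and the 2% tolerance are hypothetical, and sliding, friction and load are ignored. No wheel examples are stored anywhere, yet a round plate is accepted because its predicted ride is smooth.

```python
import math


def regular_polygon(n_sides, radius, center=(0.0, 0.0)):
    """Outline vertices relative to the axle at (0, 0); many sides approximate a round plate."""
    cx, cy = center
    return [
        (cx + radius * math.cos(2 * math.pi * k / n_sides),
         cy + radius * math.sin(2 * math.pi * k / n_sides))
        for k in range(n_sides)
    ]


def predict_axle_heights(vertices, steps=360):
    """Predict the axle height above flat ground as the outline turns about the axle.

    At each rotation angle the ground touches the lowest vertex, so the axle
    height is minus the smallest rotated y coordinate.
    """
    heights = []
    for i in range(steps):
        theta = 2 * math.pi * i / steps
        s, c = math.sin(theta), math.cos(theta)
        lowest_y = min(x * s + y * c for x, y in vertices)  # y after rotating by theta
        heights.append(-lowest_y)
    return heights


def could_work_as_wheel(vertices, tolerance=0.02):
    """Accept the object if the predicted ride is smooth: the axle height varies
    by less than `tolerance` (a hypothetical threshold) relative to its mean."""
    heights = predict_axle_heights(vertices)
    mean_height = sum(heights) / len(heights)
    bump = max(heights) - min(heights)
    return bump / mean_height < tolerance


print(could_work_as_wheel(regular_polygon(64, 0.3)))                     # True
print(could_work_as_wheel(regular_polygon(4, 0.3)))                      # False
print(could_work_as_wheel(regular_polygon(64, 0.3, center=(0.1, 0.0))))  # False
```

The square and the off-centre plate are rejected not because they differ from stored wheels, but because their predicted ride is bumpy.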

We just predict. At every moment. We understand something when we can predict it. We understand a scene because we can predict it: we pick the interpretation of the scene that makes sense given our limited senses, and then we predict using that interpretation. If our prediction fails, then we do not understand what is happening, and hence we feel lost.
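
The loop of picking an interpretation, predicting with it, and noticing when prediction fails can be sketched in a few lines of Python. Each hypothetical interpretation of a moving object is just a function from time to predicted position, and the 0.1 tolerance is an arbitrary assumption; a real robot would need far richer models.

```python
# Hypothetical interpretations of an object moving along one axis: each one
# maps a time t (seconds) to a predicted position x (metres).
INTERPRETATIONS = {
    "rolling ball (constant speed)": lambda t: 2.0 * t,
    "falling can (constant acceleration)": lambda t: 0.5 * 9.81 * t ** 2,
}


def interpret(observations, interpretations=INTERPRETATIONS, tolerance=0.1):
    """Pick the interpretation that best predicts the observed (t, x) pairs.

    Returns None ("lost") when even the best interpretation's worst prediction
    error exceeds the tolerance.
    """
    best_name, best_error = None, float("inf")
    for name, predict in interpretations.items():
        error = max(abs(predict(t) - x) for t, x in observations)
        if error < best_error:
            best_name, best_error = name, error
    return best_name if best_error <= tolerance else None


print(interpret([(0.0, 0.0), (0.5, 1.0), (1.0, 2.0)]))  # rolling ball (constant speed)
print(interpret([(0.0, 5.0), (1.0, -3.0)]))             # None
```

The `None` result plays the role of feeling lost: no available interpretation predicts what is being observed.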

Having reached this point, the real question is how we embed this prediction ability into an artificial system and make it use that ability to create understanding. Now that's another story…

References:

[1] Jonathan Schaeffer, Neil Burch, Yngvi Björnsson, Akihiro Kishimoto, Martin Müller, Robert Lake, Paul Lu, Steve Sutphen. Checkers Is Solved. Science, 2007.

[2] Roger Penrose. Shadows of the Mind. 1994.
